Continuing my adventure in AI, I wanted to be able to access my private, local AI server from outside my home. It’s great to be able to deploy Llama 3 locally on my gaming PC, as described in this post: https://maple-street.net/meta-llama-3-local-deploy-and-games-examples/. However, I wanted to be able to reach my AI server on the go.

Initially, I tried using Windows Subsystem for Linux and Docker on my Windows PC to achieve this. However, I couldn’t get Windows to allow external access correctly. I have an R730xd in the basement with an NVIDIA card in it (verify which CUDA versions your card supports), so I decided to build a VM dedicated to Llama and Open WebUI. I was not too hopeful about getting a usable AI, since my NVIDIA card is over 10 years old, but I thought, why not?

See the following post, where I discuss setting up GPU passthrough on ESXi; the process should work with any NVIDIA GPU: https://maple-street.net/poweredge-r720-and-nvidia-k4200-passthrough-to-virtual-machine/

Now, let’s get into the installation. At this point, you should have an Ubuntu 22.04 bare-metal system or virtual machine with an NVIDIA GPU of some kind.
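Before installing anything, it can help to confirm that the GPU is actually visible to the machine, since passthrough problems show up here first. This is a minimal sketch; the function name `has_nvidia_gpu` is my own, and it assumes `lspci` (from the pciutils package) is available.

```shell
# Sketch: succeed if `lspci` output (read on stdin) shows an NVIDIA display device.
# GPUs usually appear as "VGA compatible controller" or "3D controller".
has_nvidia_gpu() {
    grep -Eqi '(vga compatible controller|3d controller).*nvidia'
}
```

Usage: `lspci | has_nvidia_gpu && echo "NVIDIA GPU found"`. Reading from stdin instead of calling `lspci` inside the function keeps it easy to test on a machine without the card.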

Step 1: Update and Install Required Packages

sudo apt-get update
sudo apt install build-essential gcc dirmngr ca-certificates software-properties-common apt-transport-https dkms curl -y

These commands update the package list and install necessary packages for building and installing CUDA drivers.

Step 2: Add NVIDIA Public Key

curl -fsSL https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/3bf863cc.pub | sudo gpg --dearmor | sudo tee /usr/share/keyrings/nvidia-drivers.gpg > /dev/null 2>&1

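This command downloads NVIDIA’s repository signing key, converts it to binary format with gpg --dearmor, and saves it as /usr/share/keyrings/nvidia-drivers.gpg, the keyring the repository entry in the next step points to.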

Step 3: Add NVIDIA Repository

echo 'deb [signed-by=/usr/share/keyrings/nvidia-drivers.gpg] https://developer.download.nvidia.com/compute/cuda/repos/ubuntu2204/x86_64/ /' | sudo tee /etc/apt/sources.list.d/nvidia-drivers.list

This command adds the NVIDIA repository to the system’s sources list.
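The repository path above is hard-coded for Ubuntu 22.04 (ubuntu2204). If you are on a different release, a sketch like the following can build the same line from /etc/os-release. The function names are hypothetical, and it assumes NVIDIA keeps the same ubuntuXXYY/<arch> layout and signing key for your release, so check NVIDIA’s download page before relying on it.

```shell
# Print VERSION_ID (e.g. 22.04) from an os-release file (default /etc/os-release).
# Runs in a subshell so sourcing the file does not leak variables.
ubuntu_version_id() (
    . "${1:-/etc/os-release}" && printf '%s\n' "$VERSION_ID"
)

# Build the deb line for nvidia-drivers.list.
# Assumption: the repo path is ubuntu<version without dots>/<arch>.
nvidia_repo_line() (
    version=$(printf '%s' "$1" | tr -d '.')   # 22.04 -> 2204
    arch=${2:-x86_64}
    printf 'deb [signed-by=/usr/share/keyrings/nvidia-drivers.gpg] https://developer.download.nvidia.com/compute/cuda/repos/ubuntu%s/%s/ /\n' "$version" "$arch"
)
```

Usage: `nvidia_repo_line "$(ubuntu_version_id)" | sudo tee /etc/apt/sources.list.d/nvidia-drivers.list`. On 22.04 this produces exactly the line used in Step 3.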

Step 4: Update Package Index

sudo apt update

This command updates the package index to include the newly added repository.

Step 5: Install CUDA Drivers

apt search cuda-drivers
sudo apt install cuda-drivers-550 cuda

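The first command lists the available cuda-drivers packages; the second installs the 550 driver branch along with the cuda meta-package (CUDA toolkit and drivers). Pick a branch your card supports; older GPUs may need an earlier branch.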
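To see which driver branches the repository actually offers before choosing one, you can filter the `apt search` output. This is a minimal sketch; the helper name `list_driver_branches` is my own, and it assumes `apt search` prints package names as `name/suite version arch` at the start of each line.

```shell
# Sketch: read `apt search cuda-drivers` output on stdin and print the
# available cuda-drivers-<branch> numbers in ascending order.
# The trailing "/" in the pattern skips packages such as cuda-drivers-fabricmanager-550.
list_driver_branches() {
    grep -Eo '^cuda-drivers-[0-9]+/' | sed 's/^cuda-drivers-//; s/\/$//' | sort -un
}
```

Usage: `apt search cuda-drivers 2>/dev/null | list_driver_branches`. The `2>/dev/null` hides apt’s warning about not having a stable CLI interface.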

Step 6: Reboot

sudo reboot

This command restarts the system so the new NVIDIA kernel driver is loaded.
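After the reboot, it is worth confirming that the driver loaded before moving on. This sketch wraps `nvidia-smi`, which ships with the NVIDIA driver; the `NVIDIA_SMI` override variable is hypothetical and exists only so the function can be tested without a GPU.

```shell
# Sketch: print one CSV line per GPU (name, driver version, total memory),
# or fail with a message if nvidia-smi is missing.
# NVIDIA_SMI is a hypothetical override; it defaults to nvidia-smi.
verify_nvidia_driver() {
    smi=${NVIDIA_SMI:-nvidia-smi}
    if ! command -v "$smi" >/dev/null 2>&1; then
        echo "nvidia-smi not found: the driver install may have failed" >&2
        return 1
    fi
    "$smi" --query-gpu=name,driver_version,memory.total --format=csv,noheader
}
```

If this fails or lists no GPU, check the passthrough setup and the driver branch before installing Ollama, otherwise the model may silently fall back to the CPU.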

Step 7: Install Ollama

curl -fsSL https://ollama.com/install.sh | sh
ollama pull llama3

The first command downloads and runs the Ollama install script (it asks for sudo itself when needed); the second pulls the latest version of the llama3 model.
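If you rerun your setup, you can skip the download when the model is already present. This is a minimal sketch; the `has_model` helper is my own, and it assumes `ollama list` prints a header row followed by one model per line with the name (such as `llama3:latest`) in the first column.

```shell
# Sketch: succeed if the model named in $1 appears in `ollama list` output on stdin.
# "llama3" matches any tag (llama3:latest, llama3:70b); "llama3:latest" matches exactly.
has_model() {
    awk -v want="$1" '
        NR > 1 {
            name = $1
            base = name
            sub(/:.*/, "", base)   # strip the tag: "llama3:latest" -> "llama3"
            if (name == want || base == want) found = 1
        }
        END { exit found ? 0 : 1 }
    '
}
```

Usage: `ollama list | has_model llama3 || ollama pull llama3`.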

Step 8: Test Ollama Model

- (Optional) Test your installed Ollama model to make sure it runs as expected:

ollama run llama3

- When you’re done testing, exit with:

/bye
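You can also test the model without the interactive prompt by calling Ollama’s local REST API, which listens on http://localhost:11434 by default. This is a sketch; the helper names are my own, and the JSON escaping only handles backslashes and double quotes (prompts with newlines or control characters are not covered).

```shell
# Build the JSON body for POST /api/generate; "stream":false returns one JSON reply.
ollama_payload() {
    prompt=$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')
    printf '{"model":"%s","prompt":"%s","stream":false}\n' "$1" "$prompt"
}

# Send one prompt to the local Ollama server and print the raw JSON reply;
# the model's answer is in the "response" field.
ask_ollama() {
    curl -s http://localhost:11434/api/generate -d "$(ollama_payload "$1" "$2")"
}
```

Usage: `ask_ollama llama3 "Why is the sky blue?"`. For a quick one-off check, `ollama run llama3 "Why is the sky blue?"` also works without entering the chat prompt.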

Step 9: Install Open WebUI (with Snap)

sudo apt-get install snapd
sudo snap install open-webui --beta

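These commands install snapd (the Snap daemon, already present on most Ubuntu 22.04 systems; the apt package named "snap" is an unrelated tool) and then install the Open WebUI snap from the beta channel.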

That’s it! You should now have Ollama, the CUDA drivers, and Open WebUI (via Snap) installed on your Ubuntu 22.04 system.

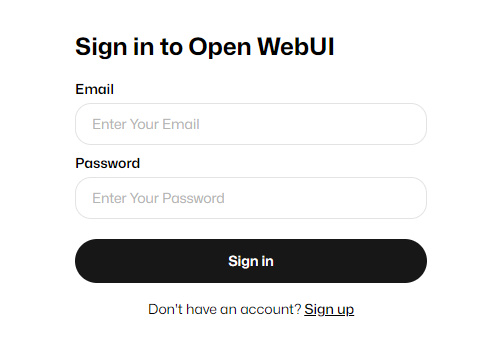

To test it, open a web browser and go to your server’s IP address on port 8080, for example http://192.168.1.6:8080.
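If you prefer to check from a terminal, this sketch builds the same address and prints the HTTP status code. The helper names are my own; it assumes Open WebUI listens on port 8080 as above, and when no IP is given it uses the first address `hostname -I` reports, which may not be the right one on hosts with several network interfaces.

```shell
# Build the Open WebUI URL: $1 = IP (default: first address from hostname -I), $2 = port (default 8080).
webui_url() {
    ip=${1:-$(hostname -I | awk '{print $1}')}
    printf 'http://%s:%s\n' "$ip" "${2:-8080}"
}

# Print the HTTP status code: 200 or a redirect means the UI answered, 000 means unreachable.
check_webui() {
    curl -s -o /dev/null -w '%{http_code}\n' "$(webui_url "$1" "$2")"
}
```

Usage: `check_webui 192.168.1.6` from another machine on your network.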

The first time, you will be prompted to log in; choose to set up an account. The first user you create becomes the admin user.

Once logged in, you will see your own ChatGPT-style interface, for free. All your data stays private and local. Select your model at the top and start your adventure. Continue reading if you would like to expose your site to the outside world.

Exposing Open WebUI to the internet:

I am not going to provide a detailed tutorial on this, as many others have already done so; NetworkChuck, for example, goes through it in detail. Whatever method you use, make sure to add port 8080 to the end of your IP address when setting up your URL.

At this point, you should be able to reach your AI over the internet from anywhere!